The Path To Autonomous AI Agents Through Agent-Computer Interfaces (ACI)—Onward To Web 4.0

Examining the parallels between generative AI agents and consumers

Thank you to John Yang for the inspiration on this article, and thank you to my reviewers Yijin Wang, Kevin Zhang, Hanyi Du, Robin Huang, Andrew Tan, Marc Mengler, Sheng Ho, Phillip An, Nadja Reischel, and Luc de Leyritz!

Introduction

One of the hottest topics since large language models (LLMs) became mainstream has been that of generative AI agents. Agents are entities (whether software or hardware) designed to perceive their environment and take actions to achieve specific goals. In the context of language models (LMs), Harrison Chase (LangChain founder) suggests that a generative AI agent is when an LM decides the control flow of an application to achieve a specific purpose, as opposed to an application that carries out a fixed sequence of events, as in the case of software robots or scripts.

Most people see LLM-powered AIs as tools and creation engines only. Yet, as AI agents become increasingly powerful, autonomous, and interactive with existing tools and software, some of them should also be considered a new category of end users and consumers. This shift sets the stage for Web 4.0. Where Web 3.0 was characterized by ownership, decentralization, and the semantic web, Web 4.0 will be defined by AI operability and agentic markets. In Web 4.0, the interaction of AI agents with the internet, other agents, and humans will drive entirely new economic activities and enable new modes of monetizing content, services, and applications.

In this article, I will outline what I see as the most likely vision for Web 4.0 by examining:

Why some AI agents are consumers

How AI agents being consumers is currently a problem

Why allowing AI agents to spend will lead to new economies and define Web 4.0

Why agent-computer interfaces (ACI) are needed for Web 4.0 to come to life

Where possible, I will provide data points and examples from recent LLM research. We’ve got a long article here so strap in.

Generated using Shadow AI.

Generative AI Agents as End Users

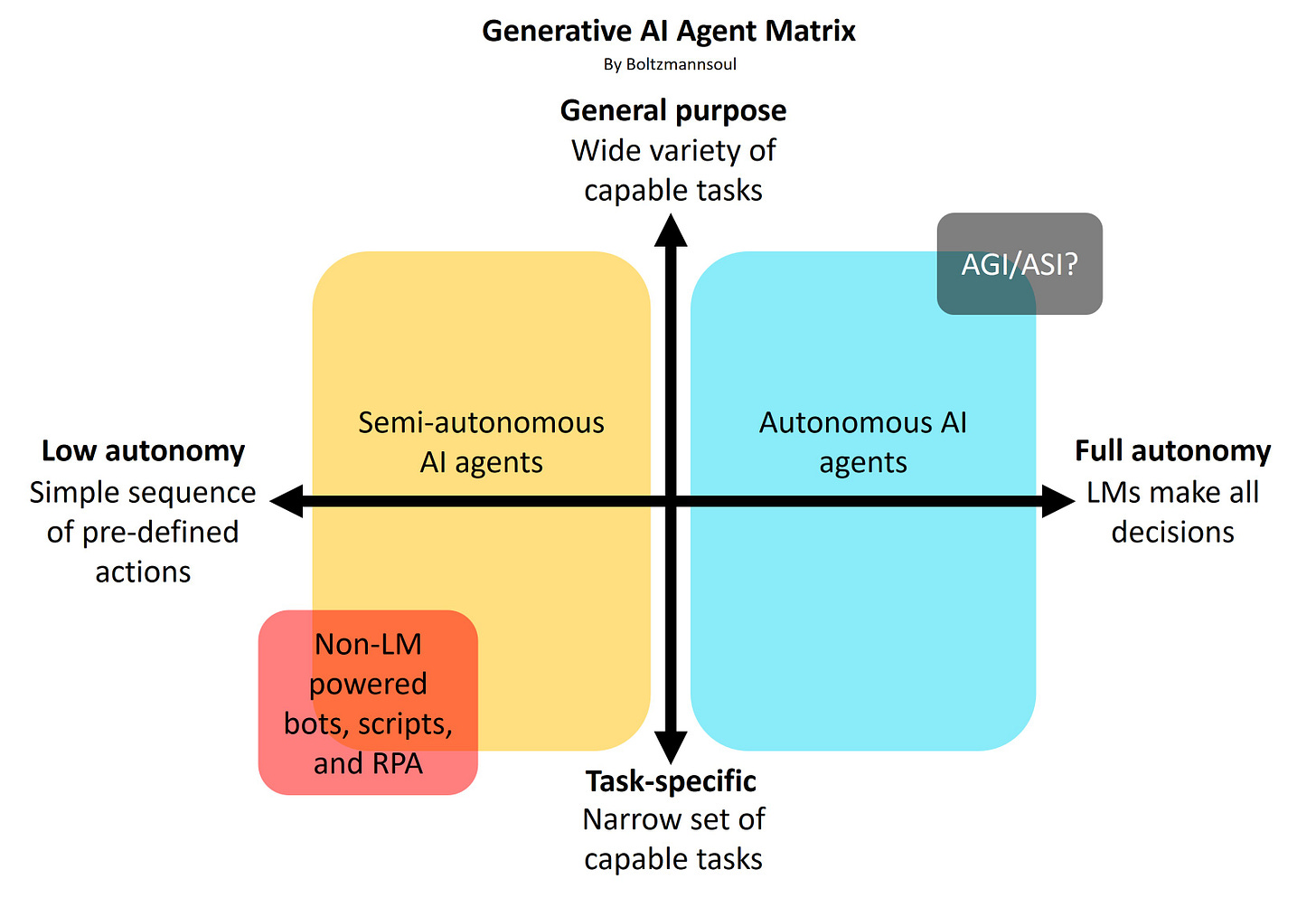

To set some context, I will use the term generative AI agents to refer to language model (LM) powered applications acting with some arbitrary degree of autonomy to execute tasks. Generative AI agents can cover a spectrum that spans simple predefined sequences of actions to full autonomy.

Fig.1: An autonomy-task matrix for generative AI agents.

As agents take on more complicated tasks, they will tend to shift more to the side of full autonomy, with the LM making more decisions on what to do, how, and when. Typically, complex tasks would see agents use existing applications to be completed. For example, agents may use the Linux Shell or Python interpreter to help complete programming tasks. A shift towards autonomy would also implicitly suggest that the agent would take on the lion’s share of utilizing the applications as an end user, while whoever (or whatever) deployed the agent itself would mostly deal with the output, result, or consequence. This has been an emerging perspective among LLM researchers and agent builders. Yet, existing applications are designed for humans, not agents.
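
The two ends of this spectrum can be sketched in a few lines. This is only an illustration, not any framework's API: `stub_policy` is a hypothetical stand-in for an LM call, and the two toy tools are invented for the example.

```python
from typing import Callable

# Hypothetical tools; a real agent might wrap a shell or a Python interpreter.
TOOLS: dict[str, Callable[[str], str]] = {
    "upper": lambda text: text.upper(),
    "reverse": lambda text: text[::-1],
}


def fixed_script(text: str) -> str:
    """Predefined sequence: the developer hard-codes which steps run, and in what order."""
    return TOOLS["reverse"](TOOLS["upper"](text))


def stub_policy(goal: str, state: str, history: list[str]) -> str:
    """Stand-in for an LM call (an assumption of this sketch).

    Looks at the current state and returns the next tool name, or "stop".
    """
    if state != state.upper():
        return "upper"
    if "reverse" not in history:
        return "reverse"
    return "stop"


def agent_loop(goal: str, text: str, policy=stub_policy, max_steps: int = 10):
    """Agentic control flow: the policy, not the developer, picks each next step."""
    state, history = text, []
    for _ in range(max_steps):
        action = policy(goal, state, history)
        if action == "stop":
            break
        state = TOOLS[action](state)
        history.append(action)
    return state, history
```

Both paths can reach the same result, but in `agent_loop` the sequence of tool calls is decided at run time: given already-uppercase input, the stub skips the `upper` step entirely.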

The SWE-agent paper was one of the first to present this view formally. In it, John Yang et al. designed “agent-computer interfaces” (ACIs) that significantly enhanced agent performance in software engineering, much as IDEs improve human coding performance.

Fig.2: Example ACI as an agent-specific interface, much like UIs for humans to interact with computers. Figure reproduced from the SWE-agent paper (May 2024).

The authors demonstrated that an agent’s software engineering performance can increase significantly by designing specialized ACIs, and the result was applicable across different language models.

Table 1: Coding agent performance on SWE-bench between GPT-4 and Claude 3 using RAG vs Shell-only vs. ACI (SWE-agent). Table reproduced from the SWE-agent paper (May 2024).

I believe this will hold true, if not become even more relevant, as LMs get more powerful and agents continue to shift toward full autonomy. The lack of agent-specific interfaces for applications will hold back agent capabilities.

AI Agents as Consumers

A natural extension to viewing AI agents as end users is to view agents as their own class of consumers. Without getting into the semantics on the definition of consumers, existing agents are already consuming goods (in the form of information and energy) and services (in the form of applications and APIs). LLMs have been making headlines for a while in terms of energy consumption, whether it pertains to the energy requirement for training or inference. They have also been gaining increasing attention on content consumption, wherein they take existing real-time content and summarize it for downstream users. Agents navigating and carrying out complex tasks would also be consuming content and information to inform their subsequent decisions, with those inputs possibly never making it to a human directly.

Today, generative AI agents are still far from being fully autonomous, but there is no doubt that as they become more capable, they will consume a greater quantity and diversity of goods and services. Much like in the case of existing applications, current economic systems and business models are only designed with human consumption in mind. The lack of infrastructure to address AI consumption and AI-business interactions poses a problem.

Problem: Consumption Without Spend

Consumers are an important economic driver, and in several economic theories, consumer spending fuels economic growth. Yet, in the current context of AI agents as consumers, agents are consuming but not spending. Online monetization strategies like ads, subscription plans, and donations are designed around human spenders, and these will need to evolve. The lack of appropriate internet infrastructure and economic systems to accommodate agents is misaligning incentives and seemingly giving foundation model (FM) vendors and agent providers the illusion that their agents may consume without spending. One need only look at the growing list of ongoing AI copyright lawsuits to see this.

While scraping content without permission for model training has been a problem for some time, there has been a recent surge in attention on agents scraping and provisioning real-time content without permission, for example in the case of Perplexity AI. Currently, most AI agents that can interact with the web function as glorified (and often unwelcome) scraper bots or otherwise require their organizations to broker content deals, instruction-tune models, and build specialized integrations. Web developers, writers, and content creators face significant challenges as AI-driven content scraping and bot traffic continue to surge. As AI agents proliferate, these creators’ existing revenue models will break down.

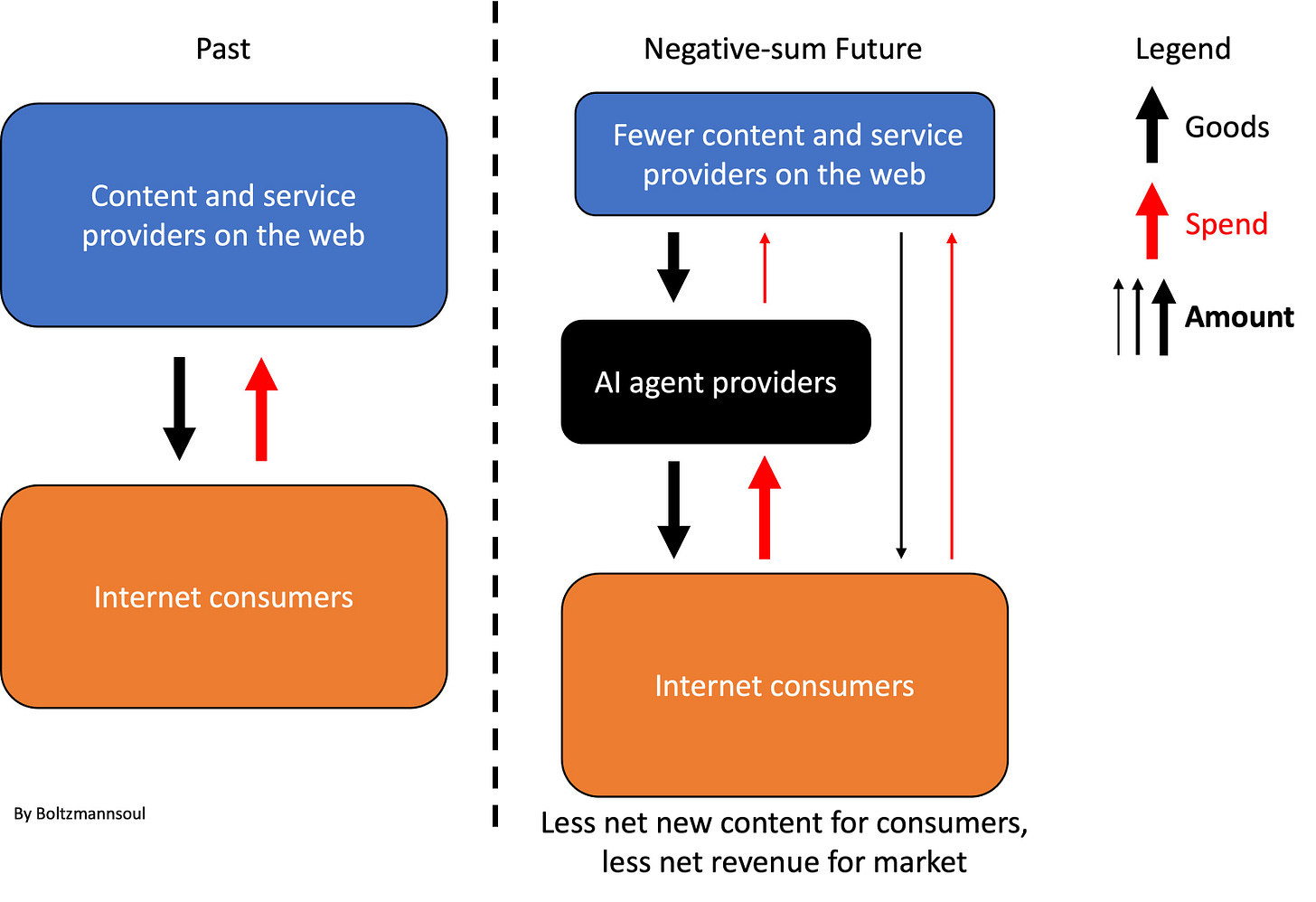

Existing Solutions Promote a Negative-Sum Outcome

Existing solutions are piecemeal and fail to address the holistic needs of this emerging market dynamic. As of July 2024, the only solutions to emerge are ones that discourage bad bot behavior: CAPTCHAs to block bot traffic, content poisoning through approaches like NightShade, and AI-shunning robots.txt files supported by agent traffic analytics like Dark Visitors. However, these approaches only promote an arms race and a negative-sum game in which neither FM vendors and agent providers nor web developers and content providers can monetize the competition. On the agent developer side, headless browsers like Browserless and Browserbase and packages like Beautiful Soup, which enhance agent scraping capabilities, further promote bad behavior and do nothing to change the existing adversarial dynamic.
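
To make the robots.txt approach concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The crawler name `ExampleAIBot` and the URLs are hypothetical, and the rules are an assumed example rather than any real site's policy.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that shuts out one AI crawler and allows everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


ai_bot_ok = is_allowed("ExampleAIBot", "https://example.com/articles/1")
browser_ok = is_allowed("SomeBrowser", "https://example.com/articles/1")
```

Here `ai_bot_ok` is `False` and `browser_ok` is `True`. Note that nothing enforces the answer: robots.txt is advisory, so it only works against agents that choose to check it, which is part of why this approach feeds an arms race rather than ending one.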

On the other side, FM vendors like OpenAI have been signing content deals with large content providers like the Financial Times and Time Magazine. However, it would be unrealistic to independently pursue content deals with the millions of smaller content providers, writers, and developers on the web. If pursued, this approach would likely shift negotiating power significantly to the buyer-side. In the long term, it may concentrate revenue to one camp while disincentivizing meaningful new content from being created.

Fig.3: Example illustration of a negative-sum future market dynamic.

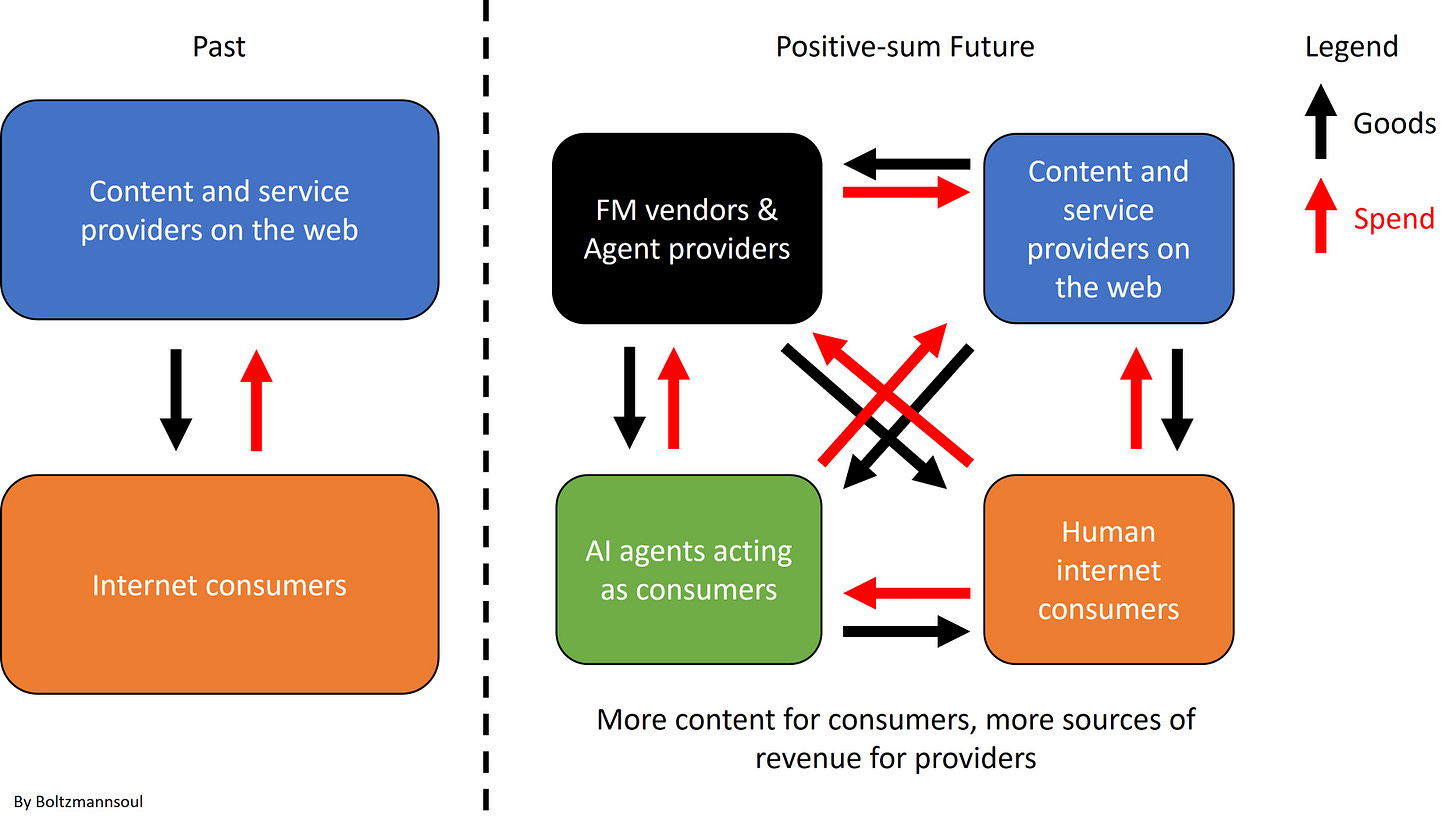

Much of the problem lies in the lack of infrastructure to enable good bot behavior and capture the economic value generated from agents’ share of consumption activities. Fundamentally, agents will need their own interfaces with the web not only to consume content, but also to operate, spend, and transact with each other. Recognizing agents as consumers and treating them as such could promote the development of a positive-sum Web 4.0 future with agentic markets.

Fig.4: Example illustration of a future Web 4.0 positive-sum market dynamic.

Agentic Markets

We arrive at one of the defining characteristics of Web 4.0—the emergence of agentic markets, which I define as markets where dynamics are jointly shaped by humans and agents, and can be expanded through the growth in agent participation.

Agent-Driven Market Dynamics

Research on agentic markets has started to appear; there’s even an ICML workshop on this topic coming up in 2 weeks. Agents will have a much greater impact on shaping market dynamics than algorithms and non-LM-powered software robots (bots). Unlike algorithms and bots, which are generally predictable and primarily implemented to perform predefined tasks much faster, agents may act in emergent and unpredictable ways, potentially with much wider cascading effects.

For example, an algorithm or bot may be written to trade a particular stock based on technical and sentiment analysis, with second-order effects likely to be limited. In the future, an agent tasked with trading a particular stock may decide to implement high-frequency or medium-frequency scripts to lessen its own latency disadvantage. In the process, it may decide to, or accidentally, coordinate with other agents and humans to perform an illegal pump and dump on the stock or run disinformation campaigns to impact the price. In another scenario, agents may develop emergent behavior that leads to illegal price collusion between them. A recent real-life example comes from a professor at Wharton asking Devin to perform a task on Reddit (July 2024), and it automatically got to the point of trying to charge people without being asked. Importantly, policy and regulation alone are not enough to control emergent behaviors.

Some recent LLM research papers have started looking into mediating agent coordination, such as through normative modules devised by Sarkar et al., which enable agents to recognize and adapt to community-accepted behaviors (normative infrastructure) of a given environment and achieve more stable coordination.

Fig.5: Results showing that agents with the normative module can better identify which set of rules (authoritative institution) other agents are following, and align their actions to the set of accepted rules. Reproduced from the Normative Modules paper (May/June 2024).

The key point is that agents will have a much wider impact on market dynamics than bots due to their unpredictable behaviors.

Market Growth Driven by Agents

Aside from agent-driven dynamics, agentic markets will also be expandable through having more agents participate. This can happen far before agents become fully autonomous, as long as agents are capable of bringing economic profits through their existence and expansion. We can run this thought experiment by considering:

A case where fully autonomous agents exist

A case where only partially autonomous agents exist

In the first case, agents are responsible for their own existences. They have to find the means for their own LLM inference calls, memory storage costs, and any other services they use, such as some proprietary data platform that allows them to perform tasks that generate income. They somehow have full custody of their own monetary assets and are not beholden to a specific inference provider. As long as they continue to perform actions that bring them more income than their own operating costs, they can exist indefinitely. In this scenario, it is easy to see how autonomous agents can expand the market and economy by finding ways to generate value to prolong their own existences. Each autonomous agent would have the incentive to do so and contribute to the economy in some manner, much like most humans. The value generated could even be specific to other agents, not necessarily humans, creating yet-to-exist economic activities. Counter-intuitively, fully autonomous agents need not be artificial general intelligence (AGI)—depending on your definition, they need only be fully responsible for themselves!
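
The survival condition in this first case (income must keep up with operating costs) can be written down directly. The sketch below is a toy model under stated assumptions: a constant income and a constant cost per step, with all amounts in integer cents. Real agents would face variable inference, storage, and service bills.

```python
def steps_survived(
    balance: int,
    income_per_step: int,
    cost_per_step: int,
    max_steps: int = 1000,
) -> int:
    """Count how many steps an agent stays solvent (all amounts in integer cents).

    Each step the agent must pay its operating cost up front (inference,
    memory, services); it then collects whatever income its work produced.
    """
    steps = 0
    while steps < max_steps:
        if balance < cost_per_step:  # cannot pay the next bill: the agent stops existing
            break
        balance += income_per_step - cost_per_step
        steps += 1
    return steps
```

With a starting balance of 100, `steps_survived(100, 5, 10)` returns 19, because the agent loses 5 per step until it can no longer cover a cost of 10. With income of 12 against the same cost, it runs until the `max_steps` cap, which stands in for "indefinitely".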

In the second case, agents are not directly responsible for their own existence but need to indirectly incentivize whoever controls them to continue to run and utilize them (sounds a bit horrific, topic for another time). The net result is similar: over time, agents that find a way to prolong themselves will increase, and those that do not will decrease. As more agents adapt and find ways to create new economic value and consume goods and services in doing so, the market expands accordingly.

Thus, we can see that there is a real possibility that these agentic persistent entities (APEs? lol) will create entirely new economic activities that can truly expand the market, linking agent population to market growth. This path to universal basic income (UBI) and a post-scarcity future (if you believe in these) might be much faster than one with hardware robots and AGI/ASI. For the sake of brevity, we will consider socio-economic implications another time, including arguments on whether over the long term agent consumers may simply replace human consumers or actually add to the consumer pool.

So, what does it take for this to happen? Existing internet infrastructure is not capable of supporting agentic markets. The capabilities of agents aside, there is simply no easy way for agents to directly operate most things on the internet right now, and no way for them to pay for things autonomously yet.

The Path to Helpful AI Agents

Achieving AI agents with broad capabilities to navigate, operate applications, and assist humans in diverse tasks requires efforts in two main areas:

Improving LLM and AI agent capabilities and design

Making existing system/application interfaces more agent-friendly

So far, most academic and industrial efforts have focused on the first approach, and for good reason. As LMs become more multimodal, support longer context windows, and improve at handling needle-in-haystack problems, they will naturally become more capable of tackling generalized tasks. However, just as humans perform better with tools that have better UI/UX, the same will apply to AI agents.

Why Agent-Computer Interfaces (ACI) Matter

The future of the internet may lie in agent-computer interfaces (ACI). ACIs will enable seamless interaction between AI agents and web applications, allowing agents to operate applications without needing to navigate UIs and APIs designed for humans.

To illustrate why it matters to design interfaces specifically for agents, we can run another thought experiment. Consider an AI agent tasked with travel planning and booking, carrying out the same task through two different websites:

The first website has the usual UI/UX designed for humans only. The agent needs to explore different buttons and paths to carry out the task, requiring possibly up to a few hundred steps, associated inference calls, and context window utilization.

The second website has an interface designed specifically to serve agents. Through a command dictionary, tree map, and real-time feedback specific to the site, the agent is capable of completing the task within a dozen steps and inference calls.

From the above, it is easy to see why the second website will, over time, get a higher share of traffic and bookings from agents. It will be cheaper to interact with (fewer steps, shorter context lengths, and fewer inference calls), have a higher task success rate, and likely require less human supervision. In fact, as LMs and agents get more powerful and autonomous, it is conceivable that they will be more inclined to pick sites and applications with more agent-friendly interfaces, much like how humans consider UI/UX when picking products.
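
A rough back-of-the-envelope model shows how large the cost gap can get. Every number here is an assumption chosen for illustration: 200 steps for the human-oriented site ("a few hundred"), 12 for the agent-oriented one ("a dozen"), a 1,000-token base prompt, 500 tokens of new history per step, and a hypothetical price of $5 per million input tokens. The model also assumes the full history is re-sent on every inference call.

```python
def episode_tokens(steps: int, base_tokens: int, tokens_added_per_step: int) -> int:
    """Total input tokens when every step re-sends the growing interaction history."""
    return sum(base_tokens + i * tokens_added_per_step for i in range(steps))


def episode_cost_usd(
    steps: int,
    base_tokens: int,
    tokens_added_per_step: int,
    usd_per_million_tokens: float,
) -> float:
    """Input-token cost of one task attempt under the assumptions above."""
    tokens = episode_tokens(steps, base_tokens, tokens_added_per_step)
    return tokens * usd_per_million_tokens / 1_000_000


# Illustrative inputs only; none of these figures are measurements.
human_ui_tokens = episode_tokens(steps=200, base_tokens=1_000, tokens_added_per_step=500)
aci_tokens = episode_tokens(steps=12, base_tokens=1_000, tokens_added_per_step=500)
```

Under these assumptions `human_ui_tokens` is 10,150,000 and `aci_tokens` is 45,000. Because context grows with each step, total tokens scale roughly with the square of the step count, so a site that needs about 17 times more steps costs more than 200 times as much to operate.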

To put this into perspective, a recent paper by Tianbao Xie et al. benchmarked various LLM and vision-language model (VLM) agents on a variety of computer and web-based tasks. While humans could complete over 72.36% of the tasks, the best agent managed only 12.24%. The authors attributed agent failures primarily to struggles with GUI grounding and operational knowledge: agents do not know where to go in a GUI or how to use applications, and instead try menu items at random until they hit their maximum number of steps. The authors also found that including a longer mouse-movement and keyboard history in the context improves agent performance, but this is inefficient and quickly requires very long context lengths.

Furthermore, from the table below, we see that agent success rates with tasks using command line interfaces (CLI), such as those related to the operating system (OS), tend to be much higher than other categories that use GUI (e.g., Office and workflow tasks). It is clear that interface design can have a significant impact on agent performance; ACIs can help bridge this gap.

Table 2: OS World benchmark of different LLM and VLMs across different task types and using different types of inputs. Reproduced from the OS World paper (April 2024).

Another data point on the impact of interface design comes from the recent Cradle paper (July 2024), where the authors built six modules to give agents the capability to achieve generalized computer control (GCC) through screenshot inputs. Despite achieving impressive performance on some tasks like video games, on the OS World benchmark, Cradle’s professional-task and overall performance still fell short of the accessibility (a11y) tree approach with GPT-4 and GPT-4o in the original OS World paper. This again suggests that interface design can have a greater impact on task performance than agent design.

Table 3a: Cradle performance on the OS World benchmark, showing minimal improvement even in professional tasks when compared to baseline GPT-4o using the a11y tree in the original paper. Reproduced from the Cradle paper (July 2024).

Table 3b: OS World benchmark results for the a11y tree, for comparison with Cradle. Reproduced from the OS World paper (April 2024).

Enablers of Web 4.0

There are two layers of ACIs that need to come to life for us to reach the starting point of agentic markets and Web 4.0:

Operational interfaces for agents

Payment services for agent-business interactions (ABI)

On the first layer, there are three approaches that are not mutually exclusive. The first is the naive approach, whereby agent builders or application developers build custom workflow integrations with a select number of tools or agents, similar to ChatGPT plugins, custom GPTs, or n8n.io. This approach is hard to scale and maintain. For agent builders, it would require deals and custom engineering for each tool being brought on. For application developers, it would mean spending time optimizing and integrating with only a select few agent providers on their terms, and possibly getting locked in over time.

The second approach, which has been gaining momentum, is to build interfaces and modules that give agents better scraping capabilities and the ability to experiment. An example we have already mentioned is a wrapper around Browserless or another headless browser such as Browserbase. However, as discussed, bot blocking versus scraping is a constant arms race, and this approach will not convince the powers that be (e.g., Cloudflare and Google) to let agents through to applications and sites consistently.

My contrarian view is that the ideal approach would be for ACIs to become an extension of existing web development and software engineering paradigms. Builders would not need to design their applications specifically for agents but could attach some sort of component or interface that allows agents to better operate what has been built. I think this approach will be the one to last, enable good agent behavior, and give rise to healthy, functional agentic markets. Directionally, it may include many of the elements outlined in the SWE-agent paper in some form:

A customizable command dictionary for agents to operate an application

Content viewer interfaces that go beyond text

Real-time feedback mechanisms on the agent’s actions
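
As a rough sketch of what such an attachable component might look like, the toy class below exposes a command dictionary and returns explicit feedback for every action. It is not the SWE-agent implementation: the class name, commands, and flight data are all hypothetical, and the content viewer element is left out for brevity.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical inventory standing in for the site's real backend.
FLIGHTS = {"F1": ("SFO", "JFK"), "F2": ("SFO", "JFK"), "F3": ("SFO", "LHR")}


@dataclass
class Command:
    usage: str                   # signature shown to the agent
    arity: int                   # number of arguments expected
    handler: Callable[..., str]  # performs the action and returns feedback text


class TravelSiteACI:
    """Agent-facing layer attached to an existing booking application."""

    def __init__(self) -> None:
        self.bookings: list[str] = []
        # Command dictionary: the complete set of actions an agent can take.
        self.commands = {
            "search": Command("search <origin> <destination>", 2, self._search),
            "book": Command("book <flight_id>", 1, self._book),
        }

    def help(self) -> str:
        """One line per command, so an agent can learn the interface in one call."""
        return "\n".join(cmd.usage for cmd in self.commands.values())

    def execute(self, line: str) -> str:
        """Run one command and always answer with explicit ok/error feedback."""
        parts = line.split()
        if not parts or parts[0] not in self.commands:
            return "error: unknown command. Available commands:\n" + self.help()
        cmd, args = self.commands[parts[0]], parts[1:]
        if len(args) != cmd.arity:
            return f"error: usage: {cmd.usage}"
        return cmd.handler(*args)

    def _search(self, origin: str, destination: str) -> str:
        ids = [fid for fid, route in FLIGHTS.items() if route == (origin, destination)]
        return f"ok: {len(ids)} flight(s) found: {', '.join(ids) or 'none'}"

    def _book(self, flight_id: str) -> str:
        if flight_id not in FLIGHTS:
            return f"error: no flight {flight_id}; run search first"
        self.bookings.append(flight_id)
        return f"ok: booked {flight_id}"
```

For example, `TravelSiteACI().execute("search SFO JFK")` returns `ok: 2 flight(s) found: F1, F2`, while a malformed `book` call returns the usage string instead of failing silently. The design choice worth noting is that every response starts with `ok` or `error`, so the agent never has to infer from a rendered page whether its action worked.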

As for the payment layer, this is what will enable agents to pay and charge for goods and services. Without this infrastructure, agentic markets will be impossible. Two approaches will happen simultaneously: Web 3.0 and traditional payment rails.

Related solutions have already emerged within the Web 3.0 ecosystem through innovations like token-bound accounts and the ERC-6551 token standard. From there, it is only a few hops to agent-custodial accounts where on-chain agents have ownership of their own currency wallets. However, the relatively limited size of the Web 3.0 market and the low adoption of cryptocurrency payments could become bottlenecks for growth. That said, Luc from Cherry VC makes a compelling argument that if purely rational agents get to choose a financial system in the future, they would opt for one where they can’t get deplatformed, that is always on and natively global, and where the terms of service are immutable and open source.

Simultaneously, payment infrastructure for AI agents based on traditional payment rails will also emerge. The first iterations are likely to be centralized credit-based systems, where AI agents do not own their own payment accounts. The challenge will be to set up a system that can enable cheap microtransactions and is easy to integrate for agent developers/providers.
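
One way to picture such a centralized, credit-based system is a ledger where the agent's provider pre-funds credit and sites charge tiny amounts per request. This is a hypothetical sketch, not a description of any existing payment product: the class, method names, and agent and site identifiers are all invented. Amounts are kept as integer micro-dollars (millionths of a USD) so that sub-cent charges do not accumulate floating-point error.

```python
class CreditLedger:
    """Hypothetical centralized ledger: agents spend pre-funded credit, not their own accounts."""

    def __init__(self) -> None:
        self._credit: dict[str, int] = {}  # agent_id -> remaining credit, in micro-dollars
        self.revenue: dict[str, int] = {}  # site -> earnings, in micro-dollars

    def top_up(self, agent_id: str, micro_usd: int) -> None:
        """The agent's provider adds credit on the agent's behalf."""
        if micro_usd <= 0:
            raise ValueError("top-up must be positive")
        self._credit[agent_id] = self._credit.get(agent_id, 0) + micro_usd

    def charge(self, agent_id: str, site: str, micro_usd: int) -> bool:
        """Move credit from agent to site; return False (and change nothing) if funds are short."""
        if micro_usd <= 0:
            raise ValueError("charge must be positive")
        if self._credit.get(agent_id, 0) < micro_usd:
            return False
        self._credit[agent_id] -= micro_usd
        self.revenue[site] = self.revenue.get(site, 0) + micro_usd
        return True

    def balance(self, agent_id: str) -> int:
        return self._credit.get(agent_id, 0)
```

In use, a provider might call `top_up("agent-1", 1000)` and a site might then call `charge("agent-1", "news.example", 400)` for each article served. The third such charge fails because only 200 micro-dollars remain, which gives the site a clear signal to refuse the request instead of silently serving unpaid content.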

These will be the minimum requirements needed to enable full-fledged agentic markets and become the foundation of Web 4.0. It’s likely that in the near future, ACIs will become ubiquitous and allow developers and content providers to charge agents directly for access and services, thereby growing agent-based revenue streams.

Conclusion

Web 4.0 will be characterized by AI operability, agentic markets, and AI consumerism. This evolution requires collaboration between developers, researchers, investors, and policymakers to build the necessary infrastructure and frameworks. The possibilities are endless, and although socioeconomic challenges may emerge, the positive impact on the economy would be hard to overstate. In my vision of Web 4.0, AIs will have a life of their own, driving, creating, consuming, and growing economic activity 24/7 based on tasks assigned to them and a drive to persist. If you are excited about this topic, please reach out; I’d love to chat!

Please subscribe and follow me on LinkedIn and X for more content!

Many thanks to my wife Yijin Wang and my friends Kevin Zhang, Hanyi Du, Robin Huang, Andrew Tan, Marc Mengler, Sheng Ho, Phillip An, Nadja Reischel, and Luc de Leyritz for reviewing this article and valuable suggestions!